Multi Arm Bandits And PPC Machine Learning

Last Updated: March 7, 2022

In today’s post, we’re going to talk about the multi arm bandit, a common type of machine learning algorithm that many pay per click (PPC) platforms use, in some variant, as one part of their systems. We’ll look at how it compares to a simple A/B test and why understanding the types of algorithms these platforms use is critical to launching successful campaigns.

What Is A Multi Arm Bandit?

A multi arm bandit (more formally, a multi-armed bandit) is a type of machine learning algorithm that pay per click platforms commonly use to find high performing audiences and ads when little is known, at launch, about what will succeed. These algorithms are a form of multivariate test that aims to push as much budget as possible into the highest performing variants while the test is still running.

To get a better understanding of what a multi arm bandit is, it’s helpful to compare it to running an A/B test.

Running An Ad Campaign With An A/B Test

Imagine an advertiser spending $10,000 a month on a campaign. They want to get as many sales as possible from that budget, but they don’t know which ad will drive the most sales. They create two ads, allocate 50% of the budget to each, and launch the campaign.

After about $2,500 has been spent, it becomes clear that one of the ads is performing significantly better: it converts at a 5% rate and generates a positive return on ad spend. The other ad converts at only a 1% rate and generates a negative ROI. However, because the campaign is configured as a simple A/B test, half of the remaining $7,500 is still spent delivering the ad that converts at 1% and loses money.

What may wind up happening in this scenario is that the negative ROI of the low performing ad cancels out the positive ROI of the high performing ad, so the campaign looks like a break even effort rather than a success. In reality, a high performing ad was found; it was our testing method that dragged down the overall campaign results.
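To put rough numbers on this, the Python sketch below computes expected sales and ROAS (revenue divided by ad spend, so 1.0 is break even on ad spend alone) for the 50/50 split. The 5% and 1% conversion rates come from the example; the $1 cost per click and $35 revenue per sale are hypothetical values chosen only for illustration, and expected values stand in for real, noisy results. For contrast, it also computes what happens if the remaining $7,500 goes entirely to the stronger ad once the first $2,500 has been split evenly.

```python
# Conversion rates come from the example; CPC and revenue are hypothetical.
CONVERSION_RATE = {"ad_a": 0.05, "ad_b": 0.01}
CPC = 1.00                # hypothetical cost per click, in dollars
REVENUE_PER_SALE = 35.00  # hypothetical revenue per sale, in dollars


def campaign_results(spend_by_ad):
    """Return (expected sales, ROAS) for a given split of spend across ads."""
    sales = sum(spend / CPC * CONVERSION_RATE[ad] for ad, spend in spend_by_ad.items())
    total_spend = sum(spend_by_ad.values())
    roas = sales * REVENUE_PER_SALE / total_spend  # revenue / ad spend
    return sales, roas


# A/B test: the full $10,000 split 50/50 for the whole month.
ab_sales, ab_roas = campaign_results({"ad_a": 5_000, "ad_b": 5_000})

# Alternative: first $2,500 split evenly, remaining $7,500 all to ad A.
shifted_sales, shifted_roas = campaign_results({"ad_a": 8_750, "ad_b": 1_250})

print(f"50/50 split: {ab_sales:.0f} sales, ROAS {ab_roas:.3f}")
print(f"Shifted:     {shifted_sales:.0f} sales, ROAS {shifted_roas:.3f}")
```

Under these assumptions the 50/50 split yields 300 expected sales and a ROAS of 1.05, roughly break even, even though ad A on its own returns 1.75. Moving the remaining budget to ad A after the first $2,500 yields 450 expected sales and a ROAS of 1.575.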

Looking At Campaign Testing Differently With A Multi Arm Bandit

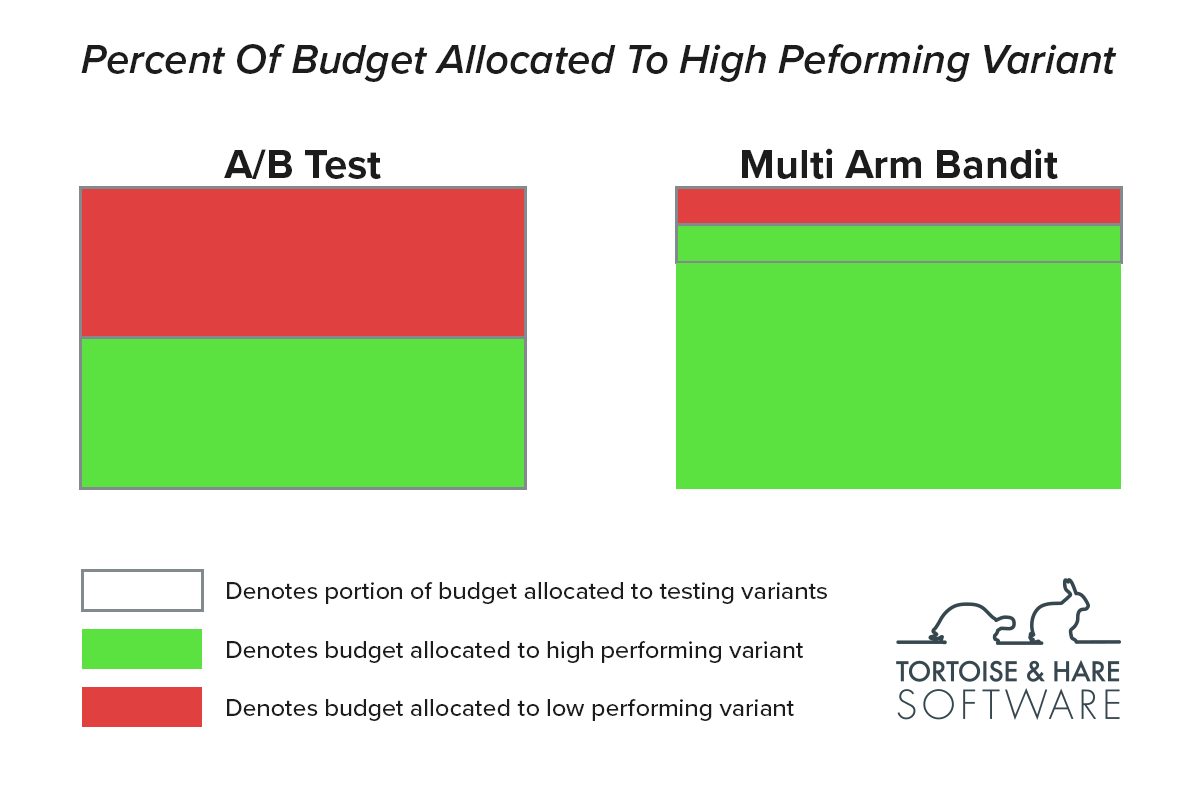

Another way to describe the campaign in the example above is that 100% of its budget was allocated to testing. What if we looked at campaign testing differently? As advertisers, we still don’t know at the outset which ads will perform well, so testing ad variants is still valuable, but do we need to allocate 100% of our budget to testing? What if, instead, we allocated a small portion of the budget to testing and redirected the core spend to the highest performing ads?

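This is exactly what a multi arm bandit algorithm aims to do. In one simple version, known as epsilon-greedy, it allocates a fixed portion of the budget to testing ad variants, say 20% of ad spend, and spends the other 80% on whichever variant the algorithm currently believes is performing best. Other bandit strategies adjust the testing share as evidence accumulates, but the trade-off between testing and exploiting what is already known is the same.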
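A fixed 20/80 split between testing and exploiting matches a classic bandit strategy called epsilon-greedy. PPC platforms don’t publish their exact algorithms, so the Python sketch below is a generic epsilon-greedy simulation, not any platform’s implementation. It assumes every click costs the same (so 20% of clicks is 20% of spend) and reuses the 5% and 1% conversion rates from the earlier example; the simulated conversions are random draws, not real data.

```python
import random


def epsilon_greedy(true_rates, rounds, epsilon=0.2, seed=42):
    """Simulate epsilon-greedy allocation of clicks across ad variants.

    true_rates: each ad's conversion rate (hidden from the algorithm).
    Each round is one click. With probability epsilon the click goes to a
    random ad (testing); otherwise it goes to the ad with the best observed
    conversion rate so far (exploiting).
    """
    rng = random.Random(seed)
    n = len(true_rates)
    clicks = [0] * n
    sales = [0] * n
    for _ in range(rounds):
        # Test at random, and also until every ad has at least one click,
        # so the observed rate below never divides by zero.
        if rng.random() < epsilon or 0 in clicks:
            ad = rng.randrange(n)
        else:
            ad = max(range(n), key=lambda i: sales[i] / clicks[i])
        clicks[ad] += 1
        if rng.random() < true_rates[ad]:  # did this click convert?
            sales[ad] += 1
    return clicks, sales


clicks, sales = epsilon_greedy([0.05, 0.01], rounds=10_000)
print("clicks per ad:", clicks)
print("sales per ad:", sales)
```

Because the testing share picks among all ads, including the current leader, the weaker ad should settle at roughly 10% of clicks (half of the 20% testing share) once the estimates stabilize, with the stronger ad getting the rest.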

Other Benefits Of Multi Arm Bandits In PPC

It would be great if digital advertising were as simple as two ad variants and static market conditions, but real world advertising is MUCH more complex. PPC platforms use dynamic creative to test thousands of different ad combinations and pair those combinations with audience data and other factors. Market conditions are also always changing; the recent outbreak of the war in Ukraine is a perfect example.

Multi arm bandits are a great way to bake in ongoing testing. What constitutes a high performing combination of ad creative and audiences may change over time. Because a share of spend keeps going to testing, these algorithms can learn that a change has happened and redirect spend to the new highest performing variants as fresh information becomes available.
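A plain running average treats a result from week one the same as one from yesterday, which makes a bandit slow to notice a shift. One common adjustment is to weight recent results more heavily. The Python sketch below is an illustration under assumptions, not any platform’s method: it runs epsilon-greedy (20% of clicks to a random ad, the rest to the current leader) with an exponentially recency-weighted estimate, and both the mid-campaign reversal of the two ads’ conversion rates and the `step` value of 0.01 are hypothetical.

```python
import random


def recency_weighted_bandit(rates_at, rounds, epsilon=0.2, step=0.01, seed=7):
    """Epsilon-greedy with exponentially recency-weighted estimates.

    rates_at(t) returns each ad's (hypothetical) true conversion rate at
    click t. The fixed step size moves each estimate a fraction `step`
    toward the newest result, so older results fade out geometrically.
    """
    rng = random.Random(seed)
    n = len(rates_at(0))
    estimates = [0.0] * n
    choices = []
    for t in range(rounds):
        if rng.random() < epsilon:
            ad = rng.randrange(n)  # test: random ad
        else:
            ad = max(range(n), key=lambda i: estimates[i])  # exploit: current leader
        converted = 1.0 if rng.random() < rates_at(t)[ad] else 0.0
        estimates[ad] += step * (converted - estimates[ad])
        choices.append(ad)
    return choices


def shifting_rates(t):
    # Hypothetical market shift: ad 0 is stronger for the first 10,000
    # clicks, then the two ads swap conversion rates.
    return [0.05, 0.01] if t < 10_000 else [0.01, 0.05]


choices = recency_weighted_bandit(shifting_rates, rounds=20_000)
before = choices[5_000:10_000]
after = choices[15_000:]
print("share to ad 0 before the shift:", before.count(0) / len(before))
print("share to ad 1 after the shift:", after.count(1) / len(after))
```

A larger `step` reacts faster to change but makes the estimates noisier; a smaller one is steadier but slower to notice that the market has moved.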

Multi arm bandits are also a good fit for unsupervised advertising. The reality is, there are a lot of amateur advertisers out there who don’t have any real understanding of how PPC platforms actually work; they just want to launch an ad campaign and pray for the best. PPC platforms typically offer both a quick way to launch a campaign with minimal configuration in under 30 minutes and detailed settings that let experts fine tune their campaigns for the best results possible. Multi arm bandit algorithms make the quick, hands-off option workable, because the algorithm keeps testing and reallocating spend without anyone managing it.

Drawbacks Of Multi Arm Bandits In PPC

It’s pretty well known that you can burn through a lot of money in a hurry with pay per click advertising. If you’ve been paying attention to the article so far, you are probably developing a better understanding of why. Although multi arm bandits are powerful machine learning algorithms that solve advertising problems in an elegant way, their bias toward action (spending most of the budget on their current best guess) means that a poorly trained algorithm can dump 80% of your budget into utter crap, leaving you with poor results and basically no ROI in a hurry.
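Part of the problem is that early results are noisy. The Python sketch below doesn’t model any platform’s training process; it uses the binomial distribution to compute how often the 1% ad from the earlier example would have at least as many conversions as the 5% ad after both receive the same, hypothetical, number of clicks. An algorithm that leans hard on exploitation could favor the weaker ad in exactly those cases. It needs Python 3.8+ for `math.comb`.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k conversions from n clicks at conversion rate p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def prob_weaker_ad_looks_as_good(clicks, strong_rate, weak_rate):
    """Chance the weaker ad has at least as many conversions as the stronger
    ad after each receives `clicks` clicks (independent binomial outcomes)."""
    total = 0.0
    for strong_sales in range(clicks + 1):
        # P(weaker ad converts at least `strong_sales` times)
        weak_at_least = sum(
            binomial_pmf(k, clicks, weak_rate) for k in range(strong_sales, clicks + 1)
        )
        total += binomial_pmf(strong_sales, clicks, strong_rate) * weak_at_least
    return total


for clicks in (20, 50, 200):
    p = prob_weaker_ad_looks_as_good(clicks, 0.05, 0.01)
    print(f"{clicks} clicks per ad: {p:.1%}")
```

With these rates, the weaker ad ties or leads roughly 43% of the time after 20 clicks each and roughly 18% of the time after 50, falling to about 1% at 200 clicks each.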

Since only a small portion of the budget is allocated to testing, it’s very easy for unsavvy advertisers to see poor early results and pull the plug before the machine learning has had a chance to identify a high performing variant. Advertisers can get caught in a never ending cycle of launching, analyzing, drawing the wrong conclusion, and pulling the plug, wasting their full ad budget with no results.
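Because only the testing share of spend is guaranteed to reach every variant, the budget needed before each variant has been tested meaningfully grows with the number of variants. The back-of-the-envelope Python sketch below assumes testing traffic is split evenly across variants and every click costs the same; the 20% testing share comes from the earlier example, while the $1 cost per click, the variant counts, and the 200-click target per variant are hypothetical.

```python
def spend_before_tested(variants, clicks_per_variant, explore_share, cpc):
    """Spend required before the testing share alone sends
    `clicks_per_variant` clicks to every variant, assuming testing
    traffic is split evenly and every click costs `cpc`."""
    testing_clicks_needed = variants * clicks_per_variant
    total_clicks_needed = testing_clicks_needed / explore_share
    return total_clicks_needed * cpc


# Hypothetical: 2 ads vs. 20 creative/audience combinations,
# 200 test clicks each, 20% of spend on testing, $1.00 per click.
for variants in (2, 20):
    cost = spend_before_tested(variants, 200, 0.20, 1.00)
    print(f"{variants} variants: ${cost:,.0f}")
```

Under these assumptions, two ads need about $2,000 of spend before each has 200 test clicks, while 20 combinations need about $20,000, twice the monthly budget in the earlier example. Exploiting traffic adds more clicks to whichever variant is leading, but not to the rest, so judging a campaign after a few hundred dollars means judging it before the algorithm has seen much.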

Final Thoughts

Multi arm bandit algorithms are a staple of PPC advertising and the web in general. They are a common way to identify high performing variants in multivariate testing scenarios, with applications across digital advertising, conversion rate optimization, and a range of other activities on the web. Understanding how these algorithms work is essential to understanding how to leverage them effectively. Automated bidding strategies that leverage machine learning are an extremely powerful way to get high ROI from your ad campaigns, but knowing when to use them, how to use them, and how to structure your accounts is a complex topic filled with many subtleties. Working with a PPC consultant to get the most out of your campaigns is an expense that can easily pay for itself. Contact us today and learn more about how we can help.
